[Summary: a brain-dump of thoughts on approaches to data standardisation relevant in the current coronavirus context.]

Over the last few weeks I’ve talked with a number of initiatives that are seeking to bring greater coherence to data collection on the impacts that coronavirus is having on their constituencies. Thousands of organisations, from chambers of commerce, to charity networks, and international agencies, are sending out surveys, or soliciting inputs, to help them understand the social, economic, organisational and operational impacts of the current pandemic – and to start charting ways forward in response.

This has led to a number of conversations asking how data standards could help. Common fears of wasted effort in duplicate data collection, missed insights from siloed data, or confusion created by incompatible categorisations, are all being compounded by the rapid data collection needs in this crisis. Yet, creating new standards can be a time-consuming process: involving in-depth negotiation of different user needs and capacities, careful drafting of definitions, and rigorous testing of schemas, in order to develop something that can function as an equitable tool for long-term communication and collaboration. That doesn’t mean, however, that it’s not possible to iterate towards more aligned and standardised data right now.

In this post I’ll try and set out a few (non-exhaustive) considerations on where some of the data standardisation practices I’ve engaged with over recent years fit in the current landscape, and some approaches to move towards aligning data collection initiatives.

Documentation, documentation, documentation

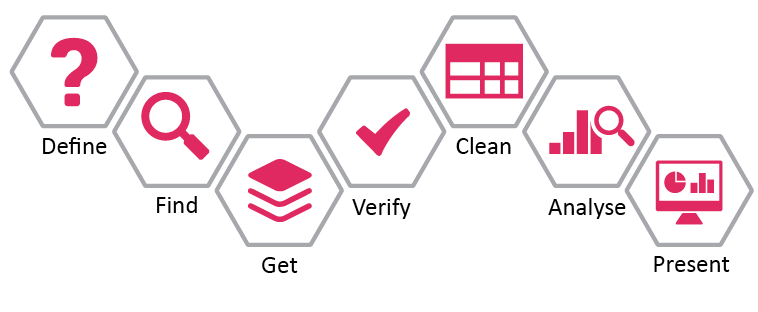

A data standard has a couple of different parts: definitions that describe what the data should cover and what each field is about, and schemas that determine how the data should be encoded, serialised and shared. But it is documentation that brings these together, and makes them widely usable.

Good documentation should allow people designing data collection instruments (surveys, studies etc.) to quickly identify the building blocks of standardisation that they can draw upon, and should make following the standard the path of least resistance, rather than an uphill struggle.

Ideally documentation should be clearly versioned, and, if intended for global use, published in ways that support language translation.

Start from user needs

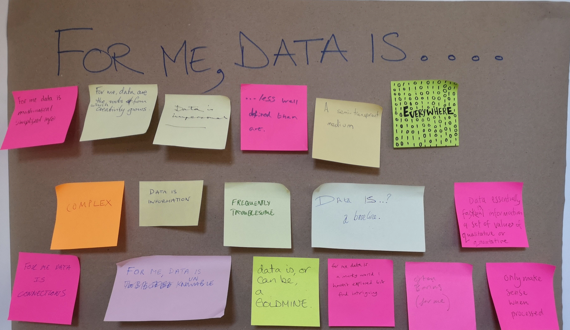

It’s easy to fall into the trap of being ‘data driven’, and trying to work out ways to bring together ‘all the data’ by imposing top-down structures on data collection or aggregation. But, in working out where to prioritise alignment of definitions and structures, it’s crucial to be driven by user need. In a crisis context, it may help to identify the primary user need that data pipelines are being built to meet (e.g. a dashboard for operational decision making), and secondary user needs that it is desirable to meet too (e.g. evaluating whether support has been provided equitably; gathering baselines for future research; supporting advocacy for funding certain needs). This will help guide decisions on…

…’just enough standardisation’

Standards are about the distribution of costs and benefits between data producers, intermediaries and data users. Without any standards, data users wanting to draw on data from different sources have to do all the work of reconciling differences and inconsistencies – and sometimes find different datasets are simply irreconcilable. Where multiple datasets have compatible definitions, but different schemas, it may be possible for intermediaries to do the work of creating a consistent dataset by standardising non-standard data. Where data producers are made responsible for data standardisation, they have to do the work of reconciling their own business needs and local definitions with the definitions and structures provided by a standard.

In the early stages of a crisis, the focus should be on what intermediaries can do: keeping the burden on data producers and users as low as possible, and focussing only on essential standardisation (guided by an understanding of user needs). By seeking to reconcile data from different sources, intermediaries will quickly learn which gaps in data alignment or standardisation are most costly to creating interoperable datasets.

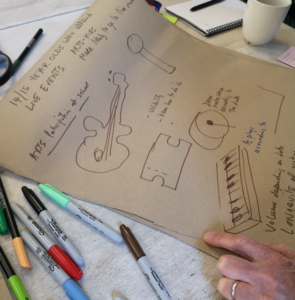

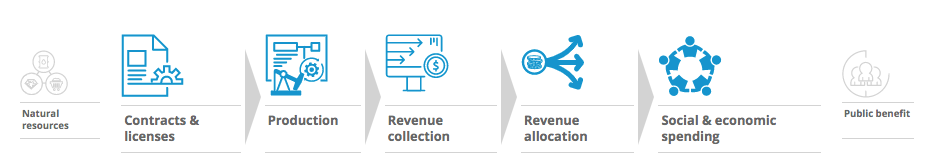

Adopting standards like the Open Contracting Data Standard or Beneficial Ownership Data Standard involves working with organisations over many months and even years to align their data (and in some cases, underlying business processes) with a shared model. In a crisis response, by contrast, data producers need light-weight building blocks that make their job easier: content to copy and paste into surveys, or data structures that can be easily implemented.

One well-developed approach for alignment in a crisis context comes from HXL, the Humanitarian eXchange Language, which provides a simple way to mark up columns in spreadsheets using a collection of known #hashtags, and then provides tools to combine and filter tagged data.
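As a rough illustration of the hashtag idea, the sketch below reads two small spreadsheets that use different human-readable headers but carry the same row of HXL tags, and then combines them by tag. It uses only the Python standard library rather than the official HXL tooling, and the sample rows, the figures and the helper name `read_hxl` are all made up for this example.

```python
import csv
import io

# Two hypothetical survey exports: different headers, same HXL hashtags.
SURVEY_A = """Organisation,Region,People affected
#org,#adm1,#affected
Food Bank North,Yorkshire,120
Shelter East,Norfolk,45
"""

SURVEY_B = """Name of group,Area,Number needing support
#org,#adm1,#affected
Advice Hub,Yorkshire,30
"""


def read_hxl(text):
    """Return data rows keyed by hashtag, ignoring the human-readable headers."""
    rows = list(csv.reader(io.StringIO(text)))
    for index, row in enumerate(rows):
        cells = [cell.strip() for cell in row if cell.strip()]
        # Treat the first row whose non-empty cells all start with '#' as the tag row.
        if cells and all(cell.startswith("#") for cell in cells):
            tags = [cell.strip() for cell in row]
            return [dict(zip(tags, data)) for data in rows[index + 1:]]
    raise ValueError("no HXL hashtag row found")


# Because both files share tags, they can be combined and filtered directly.
combined = read_hxl(SURVEY_A) + read_hxl(SURVEY_B)
yorkshire_total = sum(
    int(row["#affected"]) for row in combined if row["#adm1"] == "Yorkshire"
)
print(yorkshire_total)
```

The point of the design is that the data producer only adds one extra row to a spreadsheet they already have, and the reconciliation work stays with the intermediary.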

(For more on ‘just enough’ thinking see Rachel Coldicutt’s post on just enough internet)

(Critically) re-use existing standards

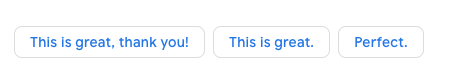

It’s rare that you will need to ‘invent’ any standards from scratch. Standardisation is often an assembly job: working out which existing standards to align with, and which pieces are aligned enough to work together. As a starting point I often turn to schema.org, the ad-hoc effort by search engines to create a common (and relatively loose) vocabulary of terms to describe everything from people, local businesses and books, to pandemic-related data. Alternatively, I look at conventions in use in existing datasets in the domain I’m helping create data models for.

Certain lower-level conventions, like using ISO 8601 dates, Unicode for text, and ISO language and country codes, are also worth encouraging and documenting. In most cases, though, as long as a data source is internally consistent in how it encodes countries, dates, languages and so on, intermediaries will be able to more-or-less map the data to common codes over the short term.
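To make the intermediary’s mapping job concrete, here is a minimal sketch of normalising one source’s dates and country names to ISO forms. The list of date formats and the tiny country table are assumptions for a single hypothetical source; a real pipeline would need a list per source and a complete country lookup.

```python
from datetime import datetime

# Hypothetical formats used by one internally consistent source.
SOURCE_DATE_FORMATS = ["%d/%m/%Y", "%d %B %Y", "%Y-%m-%d"]

# Illustrative fragment of a mapping to ISO 3166-1 alpha-2 codes, not a full table.
COUNTRY_CODES = {"united kingdom": "GB", "uk": "GB", "kenya": "KE", "france": "FR"}


def to_iso_date(value):
    """Try each known format in turn and return a YYYY-MM-DD string, or None."""
    for fmt in SOURCE_DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # leave unparseable values for manual review


def to_country_code(value):
    """Map a free-text country name to a two-letter code, or None if unknown."""
    return COUNTRY_CODES.get(value.strip().lower())
```

Note that a value like 03/04/2020 is only unambiguous because the source is assumed to be consistently day-first: that internal consistency is what makes the later mapping possible.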

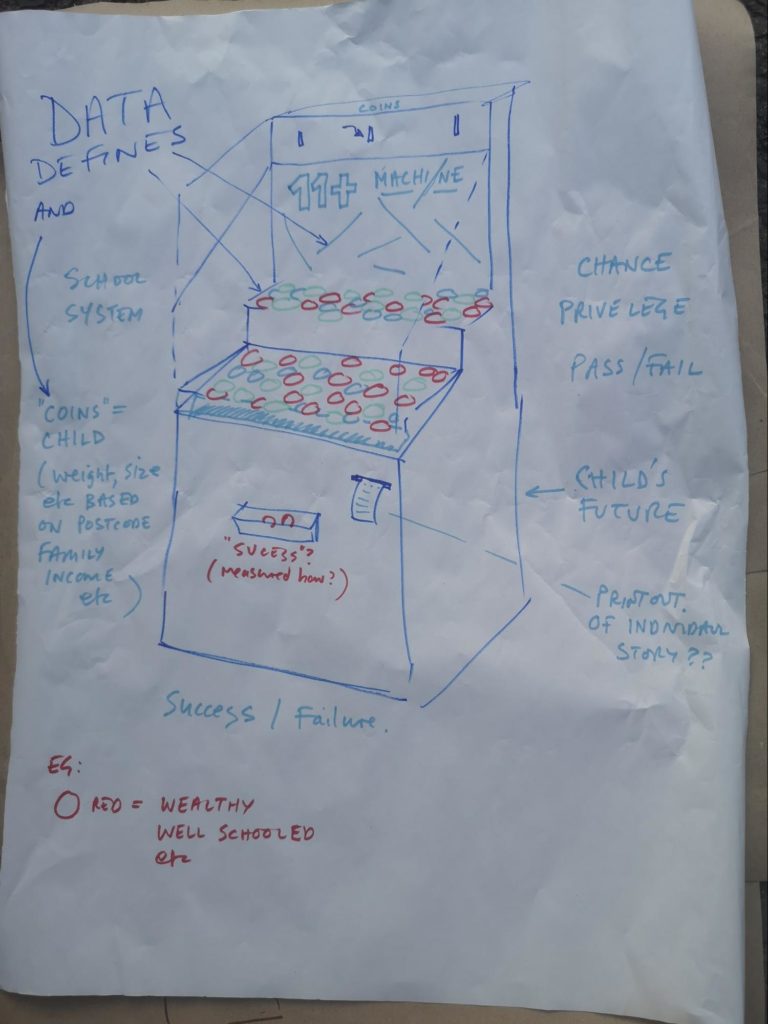

I say that one should ‘critically’ re-use existing standards, because, as the fantastic Data Feminism book underscores, definitions of data are about power: about whose lived experience and accounts of the world will be represented and shared. There is often a balance to strike between adopting common ways of representing the world, and challenging oppressive and problematic representations.

Particularly when building standards for use across national and cultural boundaries, this calls for an awareness of the many falsehoods embedded in data models, and consideration of the embedded assumptions in off-the-shelf data models. It can also call for a sensitivity to when standards, even in a crisis, should not take the path of least resistance, but should introduce some friction in deciding which categories to use, or how to disaggregate data. For example, where user needs (and here is where considering diverse secondary user needs can be important, as ‘primary user needs’ may often represent dominant power perspectives) require an understanding of how data varies by gender, or the ability to provide intersectional disaggregation, then standards should make clear how this should be recorded and shared.

Look for the keys

One way to lower the burden on data collectors is to look for the keys that unlock additional existing open datasets. For example:

- Postcodes in many countries allow data to be geocoded, and allow you to integrate a range of local classifications and statistics. In the UK, collecting the postcode of where a service is delivered allows you to look up the socio-economic status of the area, the local authority responsible for service delivery there, and a whole host of other information. In other countries, it may be possible to match location data with satellite observation data to infer other relevant classifications for a survey respondent.

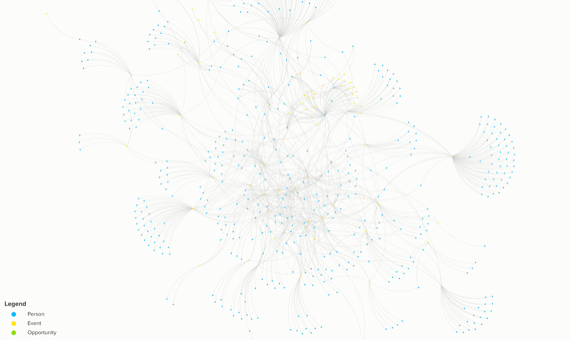

- Organisation identifiers – which, if collected and well validated, can be reconciled against public databases to find information on companies, charities and other entities. In the UK, a Charity number can be used to look up classification data on the organisation’s beneficiaries taken from annual charity returns. For many nations, company numbers can be reconciled against OpenCorporates to provide detailed corporate information.

- URLs and social media IDs can be useful in some use-cases to crawl web pages and social networks and find signals about the networks an organisation is part of, or the topics they work on.

Each sector and domain is also likely to have some of its own ‘keys’ that can hook into existing datasets (e.g. the Common Procurement Vocabulary for classifying public procurements in Europe). If you are lucky, they will be attached to relevant open datasets.

Care still needs to be taken to consider gaps in the lookup data (e.g. some countries lack open corporate register data; satellite data coverage varies; not all organisations have websites), and to avoid introducing biases through faulty assumptions (e.g. if assuming the ‘registered office’ postcode of UK charities is where their beneficiaries are, then it looks like London gets more funding than it does). It’s also important to consider how easy it will be for those providing data to enter it. For example, do organisations know their registration number? (On the organisation identifiers point, this is one of the reasons I was involved in creating org-id.guide, and there remains a lot still to do in this area.)
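A minimal sketch of using a key field in practice: survey responses are joined to a lookup table on a normalised postcode, and anything that fails to match is kept to one side so that gaps in the lookup data stay visible. The postcodes, the lookup values and the function names are all invented for illustration; a real lookup would be loaded from an open dataset.

```python
# Hypothetical lookup table keyed on postcode with spaces removed.
POSTCODE_LOOKUP = {
    "LS11AA": {"local_authority": "Leeds", "deprivation_decile": 3},
    "NR11AA": {"local_authority": "Norwich", "deprivation_decile": 5},
}


def normalise_postcode(raw):
    """Upper-case and remove whitespace so 'ls1 1aa' and 'LS1 1AA' match."""
    return "".join(raw.split()).upper()


def enrich(responses):
    """Split responses into those enriched from the lookup and those unmatched."""
    enriched, unmatched = [], []
    for response in responses:
        extra = POSTCODE_LOOKUP.get(normalise_postcode(response["postcode"]))
        if extra is None:
            unmatched.append(response)  # keep gaps visible, do not drop silently
        else:
            enriched.append({**response, **extra})
    return enriched, unmatched


responses = [
    {"org": "Food Bank North", "postcode": "ls1 1aa"},
    {"org": "Advice Hub", "postcode": "ZZ9 9ZZ"},
]
enriched, unmatched = enrich(responses)
```

Reporting the size of the unmatched list alongside any analysis is one simple way to keep the coverage question in view.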

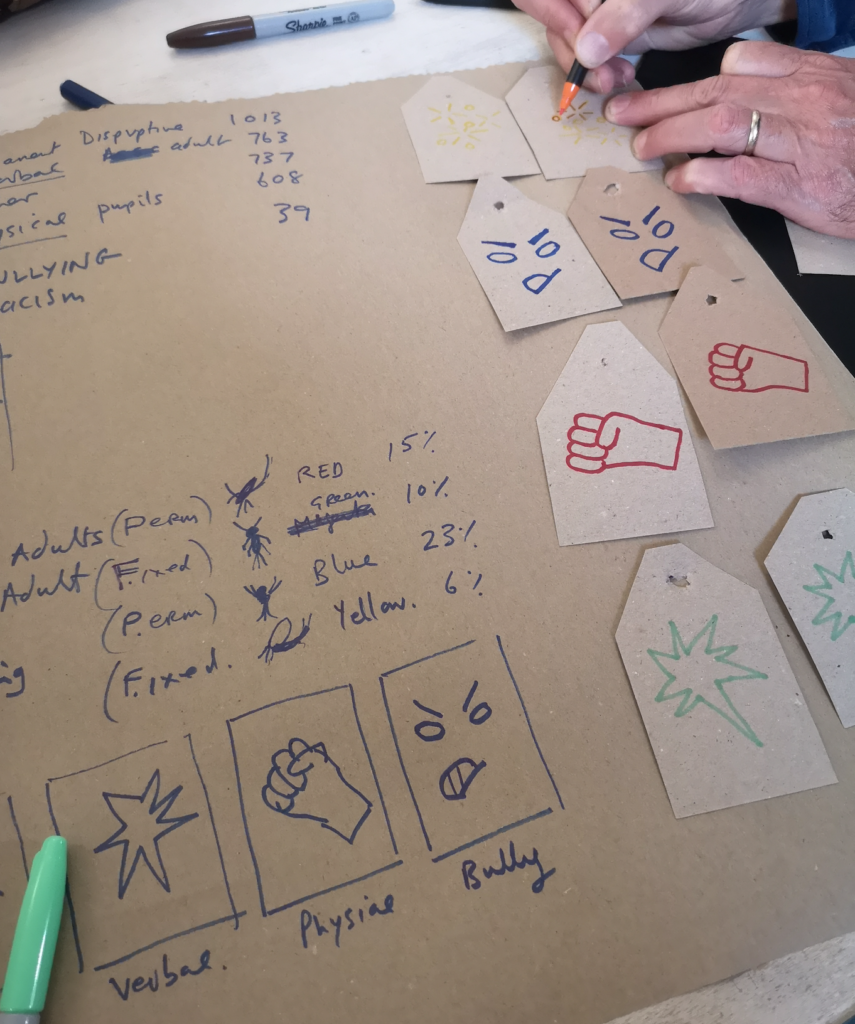

Decide on your approach to categories

At the heart of many standardisation processes is classification: sorting needs, organisations, events or people into categories. Standardising categories can be notoriously difficult, and is often hard to do in a rush. You might find there are existing classification schemes you can draw upon, or you might find a need to create your own (or, as LandVoc has done, albeit over a number of years, to engage with an existing classification scheme to get the elements you need included).

Good documentation of the boundaries of each category (ideally with examples) is vital if categories are to be used in interoperable ways.

Many of the standards I’ve worked on have stepped back from settling categorisation debates, instead representing classification elements in terms of:

- A vocabulary – to allow different datasets to use different classification schemes

- A code – that stays constant across languages

- A label – that can be translated into local languages

This offers a way to at least avoid two people talking about different things with the same terms, but leaves the alignment problem to later.
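The three-part pattern above can be sketched as a small data structure. The class name, the two vocabularies and their codes are hypothetical, chosen to show two schemes that both use the label ‘Food’ for differently scoped categories.

```python
from dataclasses import dataclass, field


@dataclass
class Classification:
    vocabulary: str  # which classification scheme the code belongs to
    code: str        # stays constant across languages
    labels: dict = field(default_factory=dict)  # language code -> translated label

    def label(self, language, fallback="en"):
        """Return the label in the requested language, falling back if missing."""
        return self.labels.get(language, self.labels.get(fallback, self.code))

    def same_concept(self, other):
        """Compare on vocabulary and code; matching labels are not enough."""
        return (self.vocabulary, self.code) == (other.vocabulary, other.code)


# Two hypothetical schemes that happen to share a label.
food_a = Classification("network-a-needs", "N03", {"en": "Food", "fr": "Alimentation"})
food_b = Classification("network-b-needs", "FOOD-PARCELS", {"en": "Food"})

print(food_a.label("fr"))           # translated label
print(food_b.label("fr"))           # falls back to the English label
print(food_a.same_concept(food_b))  # False: same word, different schemes
```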

In an ideal world, a rapid standardisation project might be able to provide ‘good enough’ categories for data collectors to start with, but then offer them some level of flexibility so that individual data collection exercises can address their local user needs by adapting core categorisations.

Semantic standards such as SKOS have a lot to offer to efforts to bring together data using heterogeneous classification schemes: allowing not only hierarchical relationships (i.e. the ability to add a ‘narrower’ concept under a headline category), but also broad and narrow matches between neighbouring concepts. However, tools and skills for working well with this kind of data and classification structure are, in my experience, quite scarce.
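For a flavour of what such mappings make possible, here is a simplified sketch in plain Python rather than an RDF toolkit. The relation names are borrowed from the SKOS mapping properties (`exactMatch`, `broadMatch`, `relatedMatch`), but the schemes, the codes and the rule that only exact and broad matches are rolled up are assumptions made for this example.

```python
from collections import Counter

# Hypothetical mappings from local survey categories to a shared scheme.
# exactMatch: same concept; broadMatch: the shared concept is broader than
# the local one; relatedMatch: associated, but not safe to aggregate.
MAPPINGS = {
    ("survey-a", "food-parcels"): ("skos:broadMatch", "shared:food"),
    ("survey-a", "rent-arrears"): ("skos:broadMatch", "shared:housing"),
    ("survey-b", "food"): ("skos:exactMatch", "shared:food"),
    ("survey-b", "wellbeing"): ("skos:relatedMatch", "shared:health"),
}

ROLL_UP_RELATIONS = {"skos:exactMatch", "skos:broadMatch"}


def roll_up(counts):
    """Sum local counts into shared categories where the mapping allows it."""
    totals, unmapped = Counter(), []
    for (scheme, code), count in counts.items():
        relation, target = MAPPINGS.get((scheme, code), (None, None))
        if relation in ROLL_UP_RELATIONS:
            totals[target] += count
        else:
            unmapped.append((scheme, code))  # needs a human decision
    return totals, unmapped


counts = {
    ("survey-a", "food-parcels"): 10,
    ("survey-b", "food"): 5,
    ("survey-b", "wellbeing"): 7,
}
totals, unmapped = roll_up(counts)
```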

Meta-data matters

One of the most important things to help intermediaries align different datasets is ‘data about the data’. Knowing who collected a dataset (ideally with the ability to contact them), knowing when and where it was collected, and ideally having pointers to the survey forms or data collection instruments used can make the process of ingesting and reconciling disparate datasets a lot, lot easier.

Conventions like MetaTab provide an easy way to get started providing standardised meta-data when circulating spreadsheets, and there are well established standards for meta-data in most domains.

Meta-data should also include clear information on restrictions or permissions that apply to re-use of a dataset, which brings me onto:

Don’t forget standards of data governance

The first question to ask before making use of any dataset that might contain sensitive information from individuals or organisations is: do I have the right to use this data? Does using or sharing this data (or analysis based on it), put anyone at risk?

As the responsible data initiative puts it, there is a:

…collective duty to account for unintended consequences of working with data by:

1) prioritising people’s rights to consent, privacy, security and ownership when using data in social change and advocacy efforts,

2) implementing values and practices of transparency and openness.

Working out early on a set of shared procedures for assessing the need for, obtaining and recording consents from data subjects for data sharing and re-use can avoid hitting barriers later on. This might take a number of forms, such as:

- Suggested privacy policy terms that describe how data might be shared and re-used;

- Identifying the different states that consent might take (e.g. consent for data to be ‘shared’ with identified partners, or consent for non-personal data to be ‘open’ – drawing on the ODI’s data spectrum) and how these should be encoded in each relevant row of a dataset;

- Adding a section to meta-data templates for those sharing data to indicate who else data can be shared with, and if any fields should be masked from an open version of a dataset.
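As a sketch of how row-level consent and a metadata section on masking might work together, the example below filters rows by consent state and drops masked fields from the open version. The three state names loosely echo the closed/shared/open idea of a data spectrum, but the field names, the metadata keys and the rule that ‘open’ consent also permits sharing with partners are assumptions for illustration, not an agreed encoding.

```python
# Hypothetical consent states, ordered from most to least restrictive.
CONSENT_LEVELS = {"closed": 0, "shared": 1, "open": 2}

# Hypothetical metadata section supplied alongside the dataset.
METADATA = {
    "shared_with": ["Regional funders forum"],
    "mask_in_open_version": ["contact_email"],
}

ROWS = [
    {"org": "Food Bank North", "contact_email": "a@example.org", "consent": "open"},
    {"org": "Shelter East", "contact_email": "b@example.org", "consent": "shared"},
    {"org": "Advice Hub", "contact_email": "c@example.org", "consent": "closed"},
]


def release(rows, metadata, audience):
    """Return the rows that may go to an audience ('shared' or 'open')."""
    required = CONSENT_LEVELS[audience]
    # Fields are only masked when preparing the open version.
    masked = set(metadata["mask_in_open_version"]) if audience == "open" else set()
    return [
        {key: value for key, value in row.items() if key not in masked}
        for row in rows
        if CONSENT_LEVELS[row["consent"]] >= required
    ]


open_rows = release(ROWS, METADATA, "open")
shared_rows = release(ROWS, METADATA, "shared")
```

Recording the consent state on every row, rather than once per dataset, means the same file can feed both a partner-only view and an open release without re-contacting respondents.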

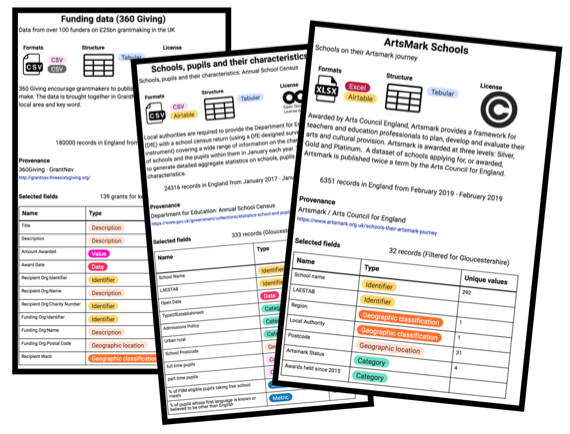

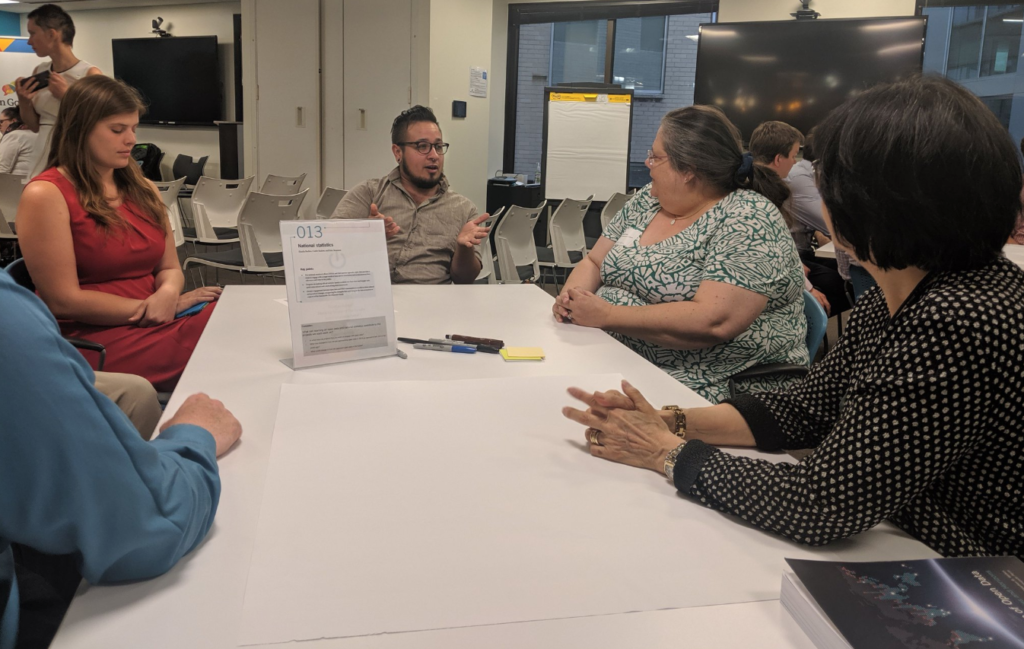

Standards are about people

Lastly, but by no means least – it is important to think of standards as a process, not a product. That documentation I mentioned at the start? That’s not for users: that’s for you. Because most of the time people don’t read documentation: they don’t have the time, or don’t know where to start. In reality, most of the standards I’ve worked on require conversations, engagement and feedback to help people align their data with them.

If someone is designing a data collection survey, the prime opportunity for standardisation is between their first draft and it going out in the field. If you can get into a conversation then, and provide prioritised feedback on how it can align more with the documented standard, how it could incorporate some ‘key fields’ that will unlock other data, or how the consent questions could be worded to be compatible with shared data governance, then you have a chance that the data flowing from that data collection can be brought together as part of a wider set of aligned insight datasets.

In all the standards I’ve worked on, the ‘Helpdesk’ team have been as vital as the documentation and schema to making standards truly work as tools of coordination and collaboration.
